Admin Tools Enhancement and Cost Optimization

Angular Django AWS Python

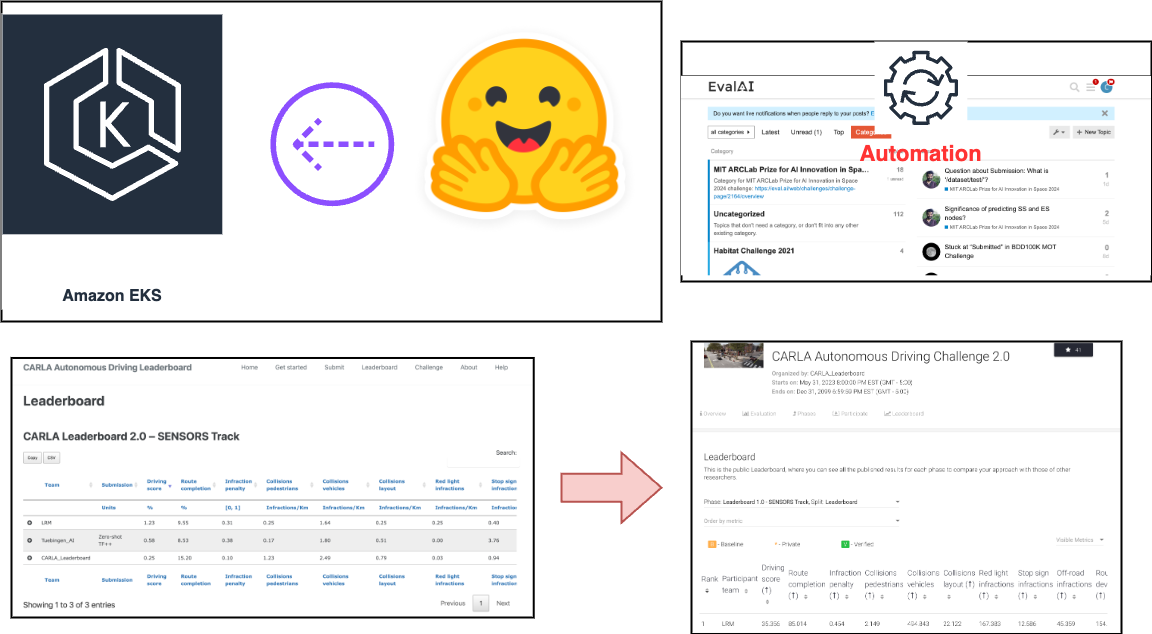

This project aims to improve the admin experience on EvalAI while reducing the cost of maintaining the platform. The key goals are to automate the handling of expired submissions on SQS queues, to identify and optimize ECS instances based on AWS health metrics, and to provide convenient admin actions through the Django administration interface. Cost optimization measures include setting custom SQS queue retention times, refining the auto-cancel scripts, and identifying and removing unnecessary AWS instances and repositories. The project also aims to automate infrastructure monitoring, so that administering EvalAI becomes more efficient and less costly.
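As one illustration of the custom SQS queue retention idea, here is a minimal sketch of how a per-queue retention time could be set, assuming a boto3 SQS client is used. The helper names `retention_attributes` and `apply_retention` are hypothetical and not part of EvalAI. The `MessageRetentionPeriod` attribute and its limits (60 seconds to 14 days) come from the SQS API.

```python
from datetime import timedelta

# SQS accepts retention periods from 60 seconds up to 14 days.
SQS_MIN_RETENTION_SECONDS = 60
SQS_MAX_RETENTION_SECONDS = 14 * 24 * 60 * 60


def retention_attributes(retention: timedelta) -> dict:
    """Build the Attributes payload for SQS set_queue_attributes (hypothetical helper)."""
    seconds = int(retention.total_seconds())
    if not SQS_MIN_RETENTION_SECONDS <= seconds <= SQS_MAX_RETENTION_SECONDS:
        raise ValueError(
            f"retention must be between {SQS_MIN_RETENTION_SECONDS} "
            f"and {SQS_MAX_RETENTION_SECONDS} seconds, got {seconds}"
        )
    # SQS expects attribute values as strings.
    return {"MessageRetentionPeriod": str(seconds)}


def apply_retention(sqs_client, queue_url: str, retention: timedelta) -> None:
    """Apply a custom retention time to one queue (hypothetical helper).

    `sqs_client` is assumed to be a boto3 SQS client, e.g. boto3.client("sqs").
    """
    sqs_client.set_queue_attributes(
        QueueUrl=queue_url,
        Attributes=retention_attributes(retention),
    )
```

A shorter retention time lets SQS discard stale submission messages on its own, which may reduce the need for separate cleanup scripts. Whether that suits a given challenge queue depends on how long its submissions are expected to wait.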

Project Size: Medium (175 hours)

Difficulty Rating: Medium
