Adversarial Data using Gradio and EvalAI

Django AWS DevOps

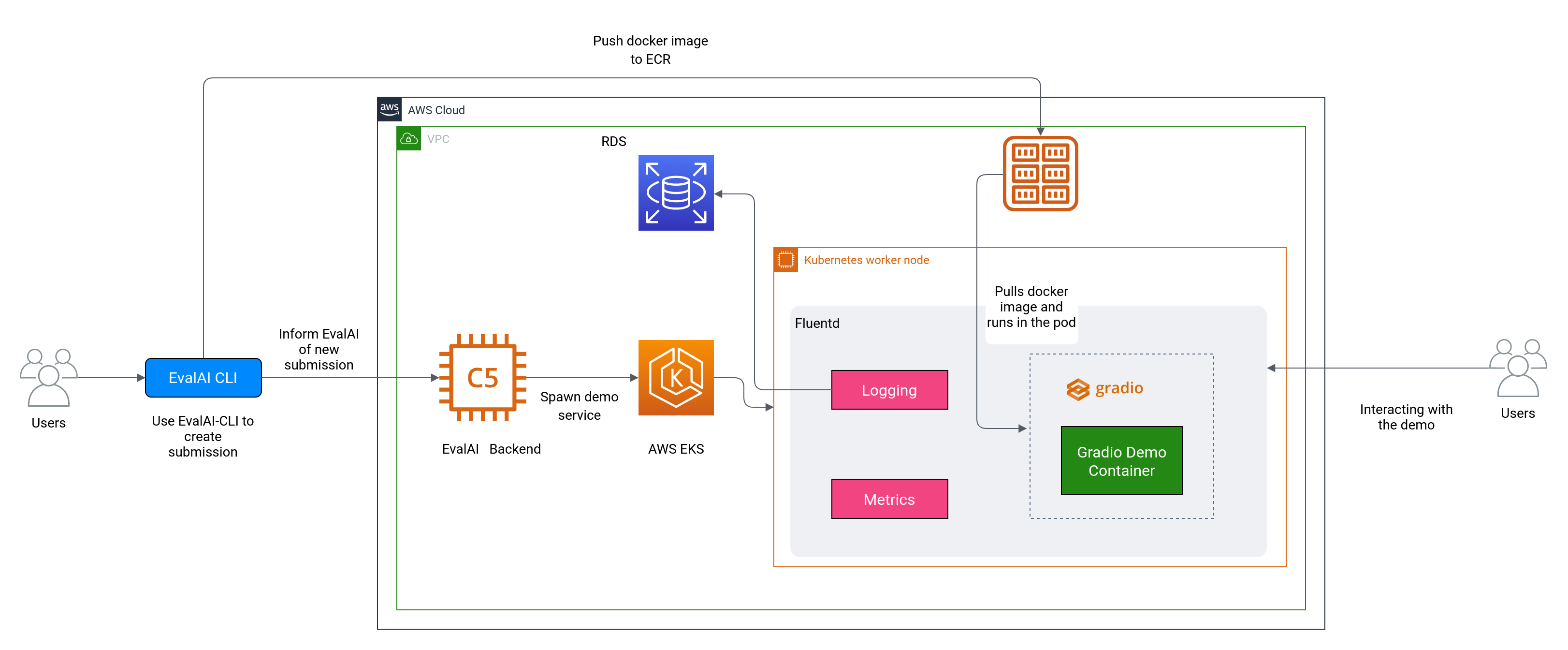

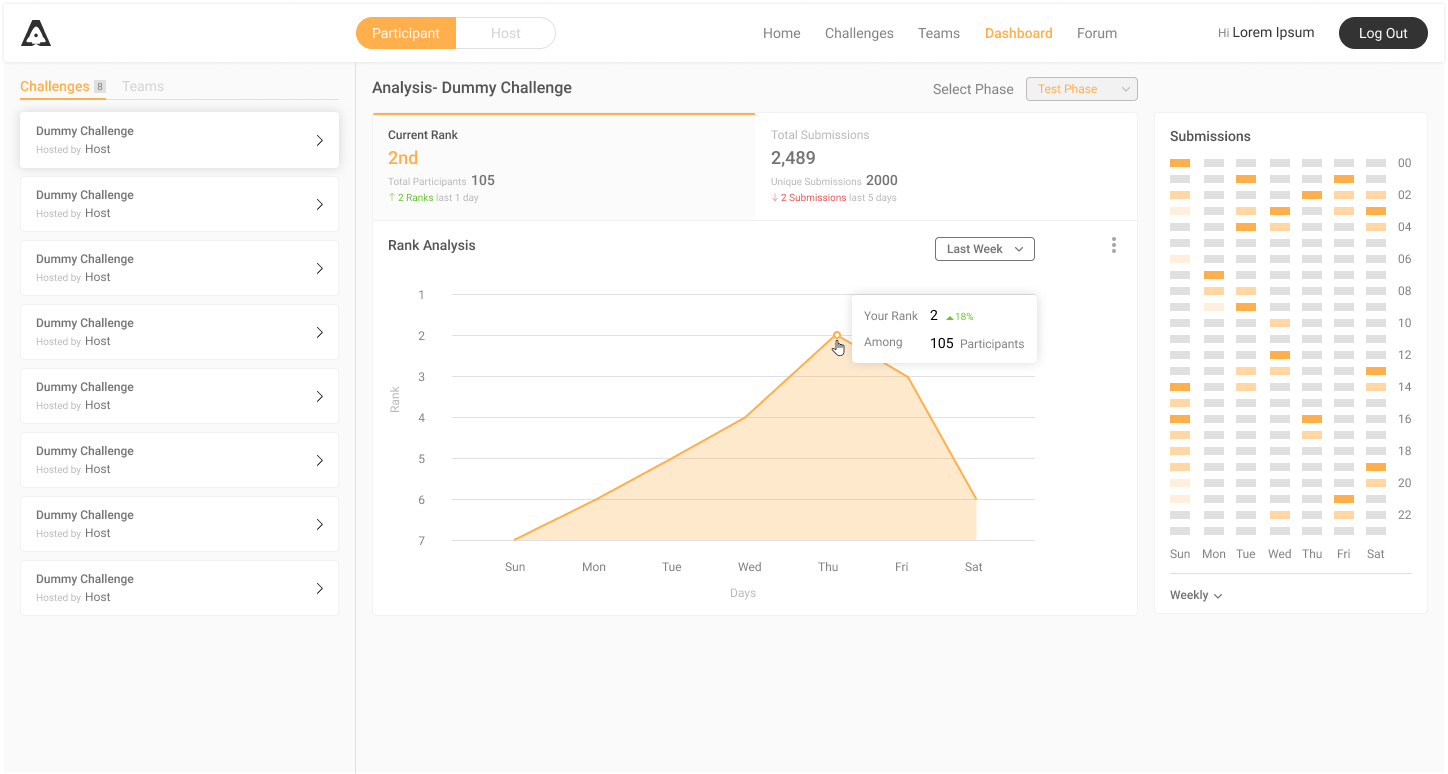

This project aims to build infrastructure for collecting adversarial data for models submitted to EvalAI. It will do so by integrating Gradio with EvalAI’s code upload challenge pipeline and deploying each submitted model as a web service. Each web service will record all user interactions, producing a per-submission dataset that can be used to evaluate the robustness of the model.
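To make the idea of recording interactions concrete, here is a minimal sketch of one way it could work. It is not the project's actual design. The names `with_interaction_log`, `toy_model`, `launch_demo`, and the `interactions.jsonl` file are hypothetical, and the model is a stand-in for a real EvalAI submission. The wrapper appends every input and output pair to a JSON Lines file, and the wrapped function is then served through a Gradio `Interface`.

```python
import json
import time
from pathlib import Path
from typing import Callable


def with_interaction_log(
    predict: Callable[[str], str], log_path: str
) -> Callable[[str], str]:
    """Wrap a predict function so each call is appended to a JSON Lines file."""
    path = Path(log_path)

    def logged(text: str) -> str:
        output = predict(text)
        record = {"timestamp": time.time(), "input": text, "output": output}
        # Append one JSON object per line so the log can be read incrementally.
        with path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        return output

    return logged


def toy_model(text: str) -> str:
    # Hypothetical stand-in for a submitted model.
    return "positive" if "good" in text.lower() else "negative"


def launch_demo(log_path: str = "interactions.jsonl") -> None:
    # Gradio is imported lazily so the logging wrapper can be used without it.
    import gradio as gr

    demo = gr.Interface(
        fn=with_interaction_log(toy_model, log_path),
        inputs="text",
        outputs="text",
    )
    demo.launch()
```

Calling `launch_demo()` starts a local web UI; each query typed into it would add one line to the log file, which could later be collected as the adversarial dataset for that submission. A real deployment would also need to handle concurrent writes, storage, and user consent, none of which this sketch addresses.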
