Enhanced Test Suite and Improved User Experience

SQL Django AngularJS AWS

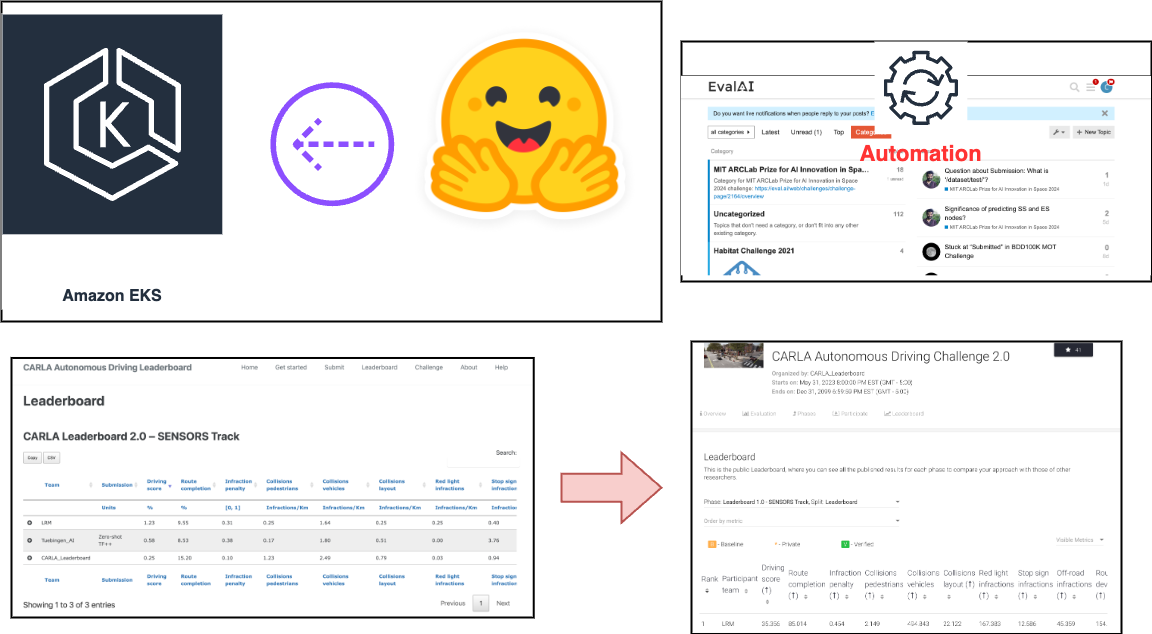

This project aims to significantly improve EvalAI’s usability by expanding its existing test suite into a comprehensive one and delivering a series of user experience enhancements. By increasing test coverage, automating critical workflows, and refining the platform’s interface and documentation, this initiative aims to create a more robust, user-friendly, and resilient environment for both challenge hosts and participants.

The enhanced test suite will verify all core functionality, from challenge creation to submission processing, reducing bugs and increasing system reliability. In parallel, targeted user experience improvements will simplify navigation, enhance error reporting, and streamline user interactions, leading to a more intuitive and supportive EvalAI ecosystem.

Project Size: Medium (175 hours)

Difficulty Rating: Medium
