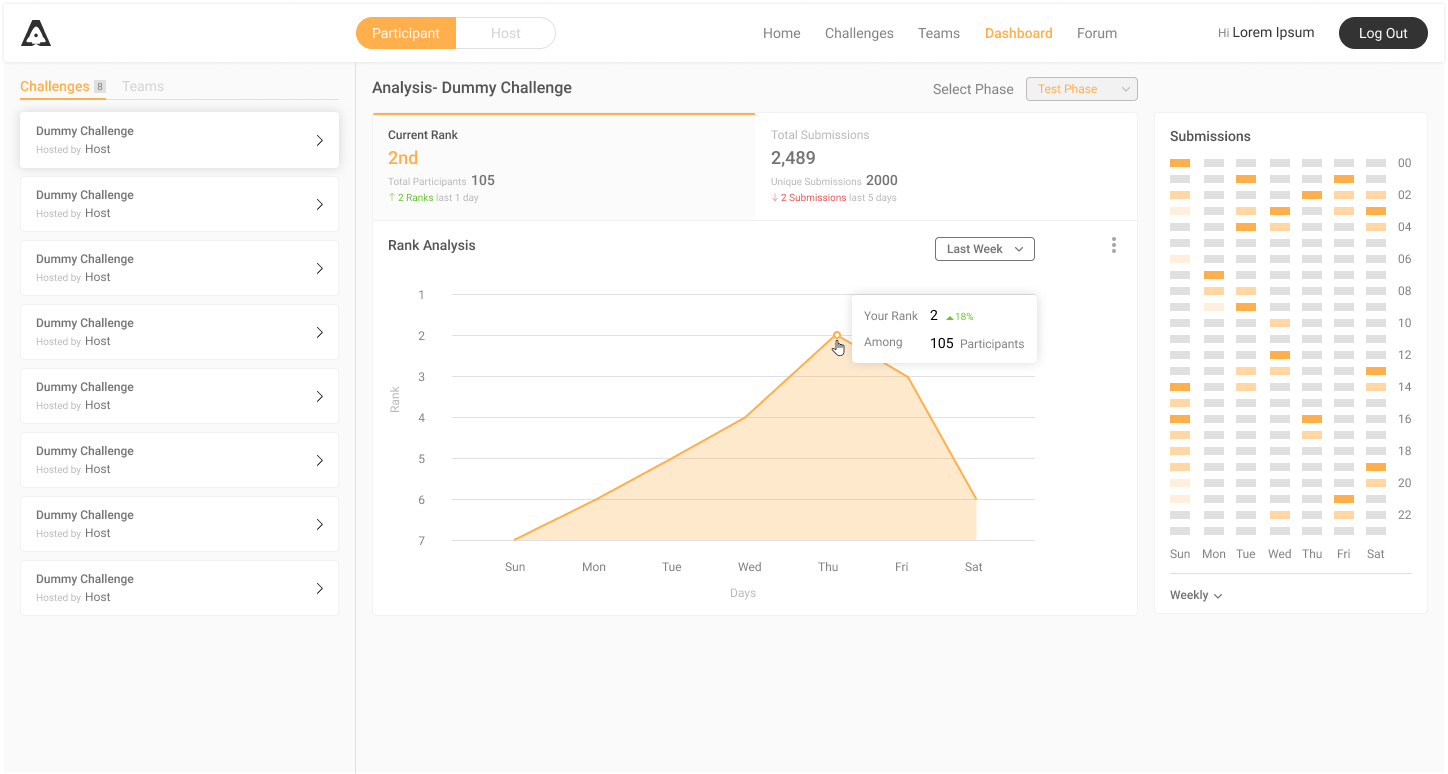

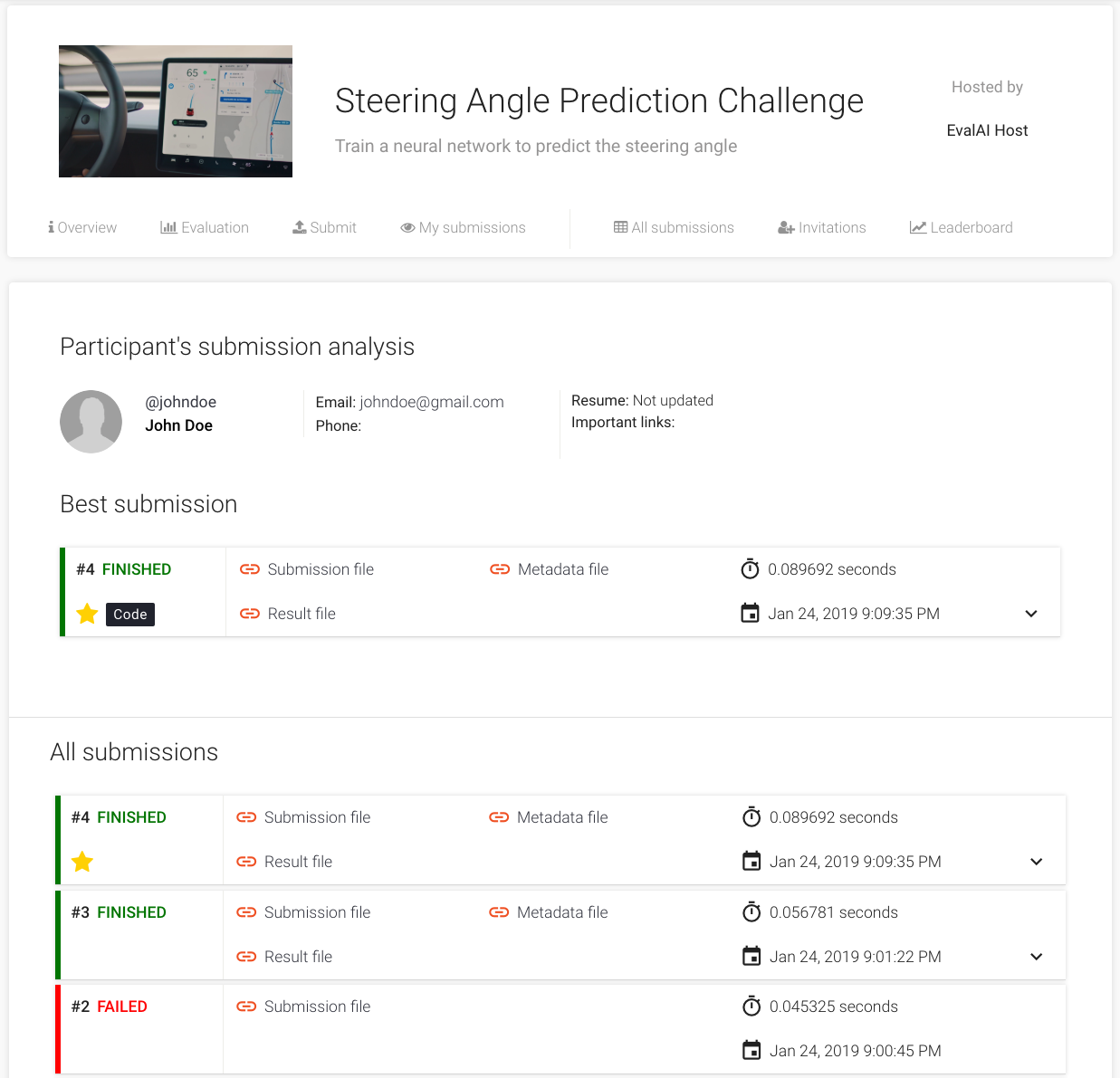

Analytics dashboards for challenge hosts and participants

Angular 7, Django, Django REST Framework, D3.js

This project will involve writing REST APIs, plotting relevant graphs, and building analytics dashboards for challenge hosts and participants. The analytics will help challenge hosts view the progress of participants in their challenge, for instance by comparing trends in the accuracy of participant submissions over time. Participants will be able to visualize the performance of all of their submissions over time, along with their corresponding rank on the leaderboard. The final goal is to provide users with a range of analytics for tracking their progress on the platform.
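
As a rough illustration of the kind of aggregation a dashboard endpoint might perform, the sketch below computes one participant's accuracy trend over time and a simple leaderboard ranking. The record layout, the sample data, and the function names `accuracy_trend` and `leaderboard` are all assumptions made for this example; they are not the platform's actual schema or API, and a real implementation would likely query the database through Django and serialize the result with Django REST Framework.

```python
from collections import defaultdict
from datetime import date

# Hypothetical submission records: (participant, submission date, accuracy).
# Accuracies are assumed to be non-negative.
SUBMISSIONS = [
    ("team_a", date(2019, 5, 1), 0.61),
    ("team_b", date(2019, 5, 1), 0.58),
    ("team_a", date(2019, 5, 2), 0.66),
    ("team_b", date(2019, 5, 3), 0.71),
]


def accuracy_trend(submissions, participant):
    """Return (date, best accuracy that day) pairs for one participant, oldest first."""
    best_per_day = defaultdict(float)
    for name, day, accuracy in submissions:
        if name == participant:
            best_per_day[day] = max(best_per_day[day], accuracy)
    return sorted(best_per_day.items())


def leaderboard(submissions):
    """Rank participants by their best accuracy; rank 1 is the highest."""
    best = defaultdict(float)
    for name, _day, accuracy in submissions:
        best[name] = max(best[name], accuracy)
    ordered = sorted(best.items(), key=lambda item: item[1], reverse=True)
    return [(rank, name, score) for rank, (name, score) in enumerate(ordered, start=1)]
```

The trend output is a list of date and value pairs, which is a convenient shape for a line chart in D3.js, while the leaderboard output gives each participant's current rank alongside their best score.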
